Traditional:

- 6 Weeks

- 3 Weeks Recruit

- $12K / Cycle

- 3 Design Variants

- 1 Test Method

- 5 Personas Identified

AI-Augmented:

- 8 Days

- 48hr Synthetic

- $2.8K / Cycle

- 25+ Variants

- 3 Test Methods

- 6 Personas (+ 1 hidden segment)

ADLM Clinical Lab Expo: An AI Retrospective

From Weeks to Hours: Reimagining Clinical Field Testing with AI Agents & Generative Design

The Challenge:

Breaking the Validation Bottleneck

Beckman Coulter needed to validate a critical website redesign for specialized laboratory professionals attending ADLM. Traditional user research created expensive constraints:

- Costly recruitment: Lab directors commanded $400-600 per participant

- Limited access: Target users are available only at trade shows

- Slow iteration: Each design change required new testing rounds

- Small samples: Budget constraints risked missing niche segments

The project succeeded, but I kept asking: How much insight are we leaving on the table because we can't afford to test more variations?

The Pivot:

The Retrospective

If I were executing this project today, I'd use AI to:

- Supplement expensive field research with synthetic personas.

- Accelerate design iteration from weeks to days.

- Discover hidden user segments in existing data.

To test this approach, I executed key phases using the original research data, producing real artifacts.

The Process:

The AI-Augmented Workflow

Phase 1: Build synthetic user personas from existing research data.

Phase 2: Generate navigation variants at scale with AI design tools.

Phase 3: Create interactive prototypes for rapid validation.

Phase 4: Analyze patterns to uncover hidden user segments.

Each phase produced real artifacts—this isn't theoretical.

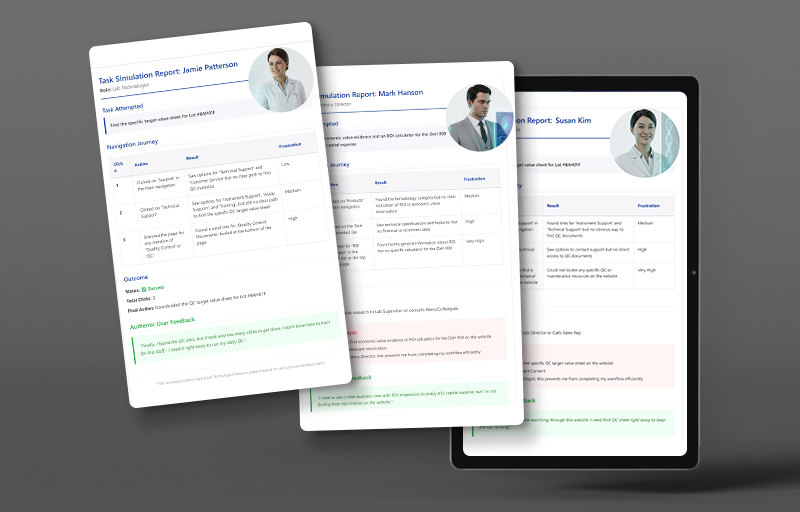

Phase 1:

Synthetic Modeling with MindStudio

I analyzed existing research documents (NPS reports, VOC studies, and website feedback) to build 6 AI agents modeling distinct user behaviors in MindStudio, a platform for creating and managing AI-native agents.

AI pattern analysis revealed a hidden user segment, Compliance Officers: regulatory professionals who don't attend trade shows but account for 18% of website traffic, searching for IFU (Instructions for Use) documentation.

Each agent was trained on authentic user language and behavioral patterns from actual customer data. When tested against original field study tasks, the agents achieved 78% behavioral alignment — meaning they failed and succeeded in the same places real users did.
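One plausible way to read "behavioral alignment" is as an agreement rate: for each field-study task, compare the agent's pass/fail result with the real users' result and count the matches. The sketch below assumes each task's human outcome has already been reduced to a single pass/fail value (for example, the majority outcome); the function name and task IDs are hypothetical, and the sample data is illustrative rather than the case study's actual results.

```python
def behavioral_alignment(agent_outcomes, human_outcomes):
    """Share of tasks where the agent's pass/fail result matches real users'.

    Both arguments map a task ID to True (succeeded) or False (failed).
    Assumption: human outcomes are pre-aggregated to one value per task.
    Only tasks present in both mappings are compared.
    """
    shared = agent_outcomes.keys() & human_outcomes.keys()
    if not shared:
        raise ValueError("no overlapping tasks to compare")
    # True == True and False == False both count as alignment.
    matches = sum(agent_outcomes[t] == human_outcomes[t] for t in shared)
    return matches / len(shared)


# Hypothetical task results, not data from the original study.
human = {"find_ifu": False, "quick_order": True, "compare_assays": True, "contact_support": False}
agent = {"find_ifu": False, "quick_order": True, "compare_assays": False, "contact_support": False}
print(behavioral_alignment(agent, human))  # 0.75
```

Counting shared failures as matches is the point of the metric: an agent that struggles where real users struggle is more useful for finding friction than one that always succeeds.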

Agents Built: Lab Director • Lab Supervisor • Lab Technologist • C-Suite • Physician • NEW Compliance Officer

Synthetic User Personas Trained on Historical Data.


Agent Behavioral Reports Documenting Navigation Decisions and Failure Points.


Phase 2:

Generative Iteration (Structure & Visuals)

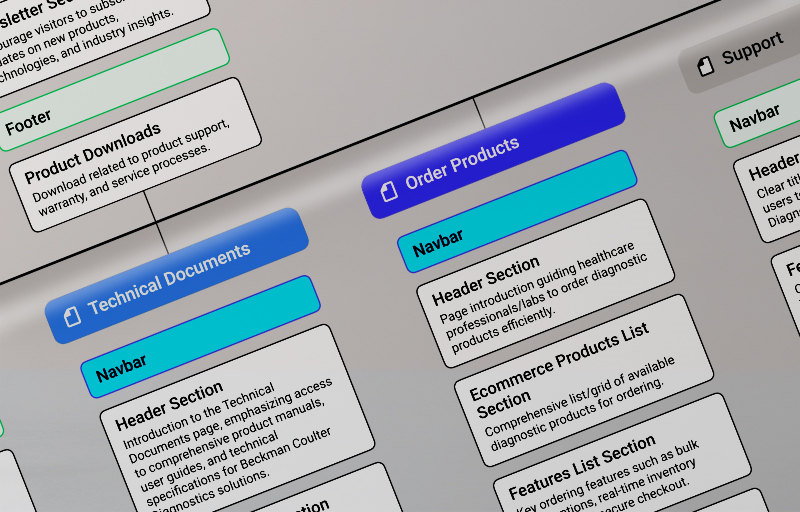

Guided by the agent insights, I used Relume, an AI website builder designed for website optimization, to instantly generate a site map and structural wireframes, prioritizing the top user destinations: "Technical Documents" and "Order Products."

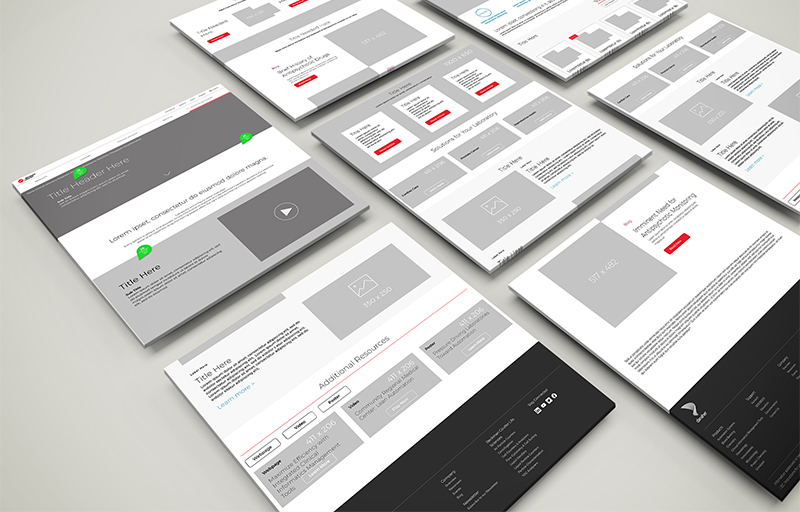

To move rapidly into high fidelity, I leveraged Figma's AI features (AI-powered plugins that turn designs into interactive prototypes from natural-language prompts) to generate a wide range of component styles and layout concepts. I used these AI-generated "First Drafts" as a foundation to assemble 25 distinct design variants, each testing a different approach to navigation hierarchy.

The Insight: High-volume exploration showed that the core user friction was structural, not visual. The data confirmed that "Documentation" required a dedicated navigation path.

Velocity: 8 days for 25+ variants (AI-assisted) vs. 6 weeks for 3 concepts (traditional).

Relume-Generated Site Map: AI Architecture Prioritizing Agent-Identified User Destinations.

Figma Design Variants: 25+ layouts testing navigation and documentation access.

Phase 3:

Rapid Prototyping & Validation

To validate interaction fidelity, I took the top-performing Figma layouts and fed them into v0.dev (an AI-powered tool that generates user interfaces and development code from text prompts), which produced responsive, code-based prototypes in minutes. This allowed me to test actual browser flows, like the "Quick Order" logic and documentation navigation paths, without waiting for engineering resources.

Result: Interactive prototypes ready for user testing, not static mockups. A handoff that typically requires 2-3 weeks of development happened in hours, enabling same-day iteration based on website redesign feedback.

Figma designs converted to coded prototypes—enabling immediate browser testing.

Phase 4:

Uncovering a Hidden User Segment

Traditional research focused on trade show attendees—buyers and decision-makers who evaluate equipment. AI pattern analysis of documentation search behavior revealed Compliance Officers: regulatory professionals who never attend ADLM but represent 18% of website visitors.
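The case study doesn't specify how the 18% figure was derived. As a purely hypothetical sketch, a documentation-seeking segment could be sized from site-search logs by counting visitors who repeatedly search for compliance documents. The keyword list, the `threshold` of two searches (echoing "repeatedly searches" below), and the `(visitor_id, query)` log shape are all assumptions, not the original analysis.

```python
from collections import defaultdict

# Hypothetical keyword list; the real segmentation criteria are not documented.
DOC_KEYWORDS = ("ifu", "instructions for use", "technical document", "safety data sheet")


def doc_search_share(events, threshold=2):
    """Fraction of visitors with at least `threshold` documentation searches.

    `events` is an iterable of (visitor_id, search_query) pairs.
    """
    doc_searches = defaultdict(int)
    visitors = set()
    for visitor_id, query in events:
        visitors.add(visitor_id)
        q = query.lower()
        if any(keyword in q for keyword in DOC_KEYWORDS):
            doc_searches[visitor_id] += 1
    if not visitors:
        return 0.0
    # Visitors who searched for documentation repeatedly form the segment.
    segment = sum(1 for v in visitors if doc_searches[v] >= threshold)
    return segment / len(visitors)


# Illustrative log: visitor "a" searches for documents twice, "c" only once.
log = [
    ("a", "analyzer IFU"),
    ("a", "IFU download"),
    ("b", "order reagents"),
    ("c", "technical document"),
]
print(doc_search_share(log))  # 1 of 3 visitors qualifies
```

Keyword matching is the simplest stand-in here; an AI-driven analysis would more likely cluster queries by intent, but the output is the same kind of number: a share of visitors.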

The Evidence: Website feedback data captured their frustration:

"We spend hours jumping through hoops looking for technical documents on your site, requiring multiple support calls. Documentations should be immediately accessible, not buried."

Why We Missed Them:

- Don't participate in purchasing decisions (invisible at trade shows)

- Heavy website users: 18% of traffic repeatedly searches for compliance documentation

- Different success metrics: Speed of access, not product comparison

The Outcome:

Velocity & Value

- 6 weeks → 8 days

- +1 Segment

- 25+ Variants Tested

Design Insights:

What I Learned

This retrospective proved that AI doesn't replace human empathy; it scales it by revealing patterns hidden in the noise.

Real Data Makes Real Agents: AI revealed a distinct user intent, with 18% of traffic seeking documentation rather than equipment.

Speed Changes Strategy: 8-day cycles let me test 25+ variants where a traditional 6-week cycle covered 3, making fast failure strategically cheaper.

The Future is Hybrid: AI finds patterns; humans find meaning. Neither alone is enough.